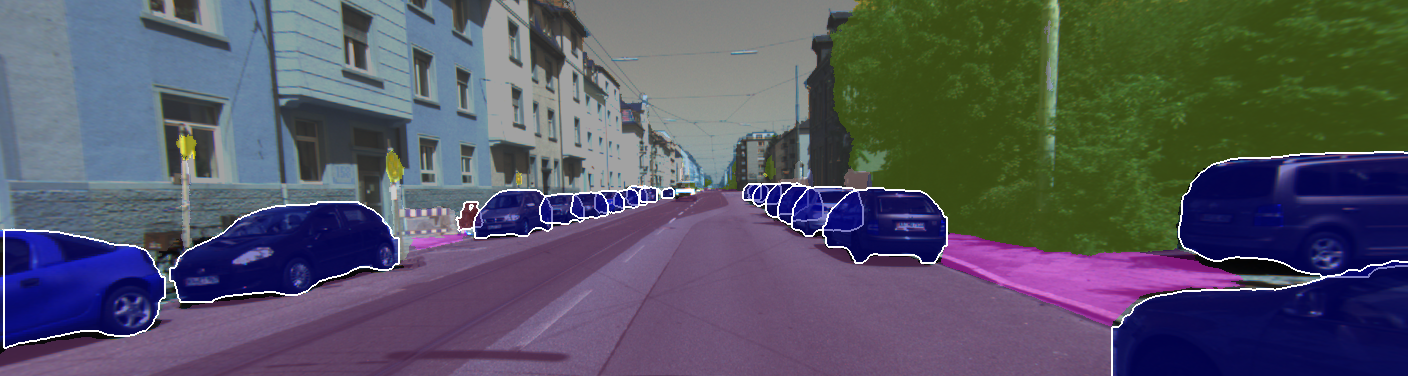

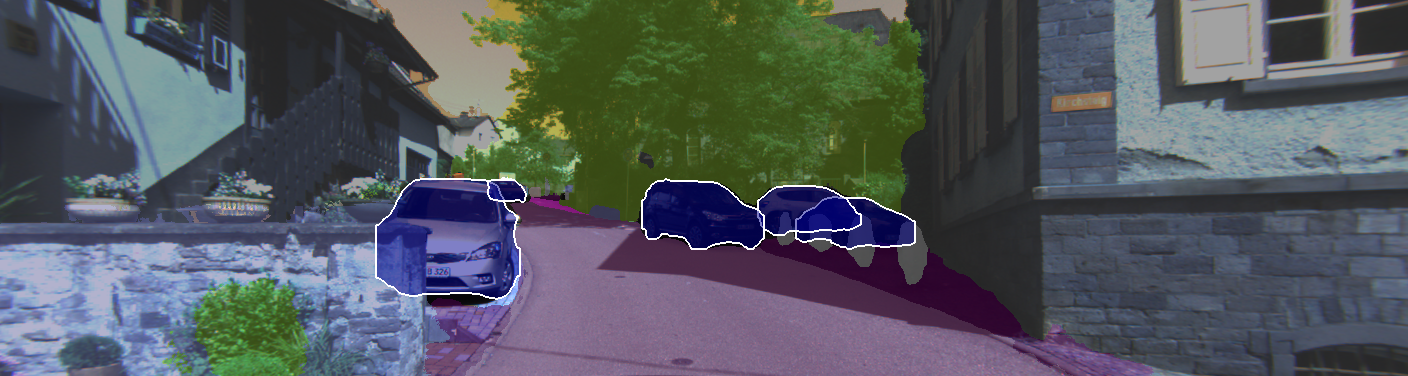

Humans rely on their ability to perceive complete physical structures of objects even when they are only partially visible, to navigate through their daily lives. This ability, known as amodal perception, serves as the link that connects our perception of the world to its cognitive understanding. However, unlike humans, robots are limited to modal perception, which restricts their ability to emulate the visual experience that humans have. In this work, we bridge this gap by proposing the amodal panoptic segmentation task.

Amodal Panoptic Segmentation

Rohit Mohan and Abhinav Valada